There are signs of growing unease with impact measurement in the charity sector. At the most recent Association of Charitable Foundations conference the whole concept faced something of a backlash. At NPC, we’ve started to explore how to ensure that impact measurement is used to drive strategy, decision-making and learning, precisely because we’re concerned that often it isn’t. Most of all, I am worried that we’re not learning.

Perhaps this shouldn’t surprise us. While the last decade has seen acknowledgement of the potential importance of impact measurement, and a consequent increase in impact measurement activity, NPC’s Making an impact study in 2012 told us that the primary driver of impact measurement activity was a desire to meet funders’ requirements. Wanting to learn and improve services was only the fifth most important driver.

So what would happen if we reframed evaluation and impact measurement so that learning became their central purpose? What capacities and approaches would organizations need to adopt to ensure they could deliver real learning through these practices? And how could their funders encourage and support that learning?

Three stages of learning

Learning is a broad concept, but here I want to concentrate on learning that leads to action, to new knowledge or skills being put to use in some way. With this focus on use, it helps to think about the timing of learning in relation to a programme or intervention we are delivering or funding. We can distinguish three phases: learning before we deliver (or fund) a programme, during its delivery, and after it has been delivered.

Each of these three stages of learning requires a different approach. They can't readily be addressed by the same set of evaluation or impact measurement tools.

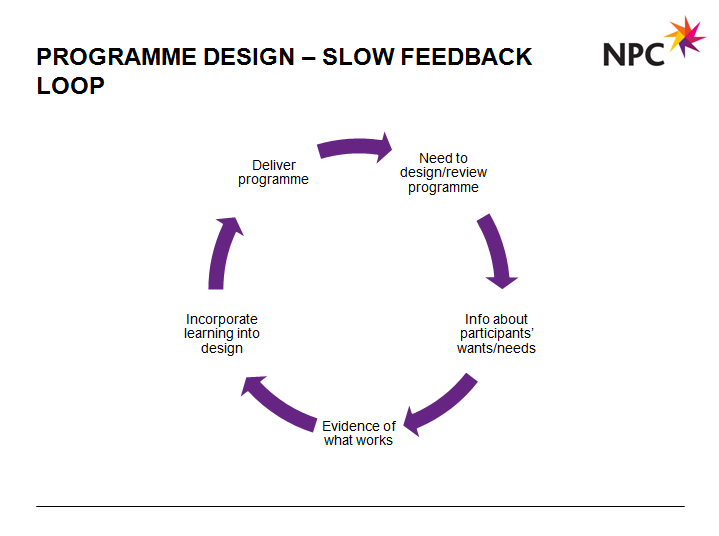

Before – a slow feedback loop

Before we design, or fund, a programme we need an approach that enables us to learn from the field, including our own previous work, to inform what we are going to do. What do we know about what works and what doesn't? What do we know about the needs and wants of those we are working with, and how we might meet them? This is a formative process: it incorporates this knowledge into the design of something we have yet to do. This is what we might call a slow feedback loop.

During – a rapid feedback loop

During delivery, we need an approach that listens and responds to constituents as we are working, one that works in near real time. This is an adaptive process, continuously comparing our plans and theories with people's observed reality and making course corrections as swiftly as possible. It is a rapid feedback loop.

After – a slow feedback loop again

After we have delivered a programme, we need to learn from what has happened. We need to synthesize what we have learned throughout the delivery stage and compare what actually happened with our initial expectations, and those of our constituents. We then need to review and analyse these findings in a way that can be fed back into the evidence base and the field's knowledge. As with the pre-delivery stage, this is a slow feedback loop.

As we might also expect, these three stages require different capacities within organizations (and their funders). Slow feedback loops lend themselves to researchers, evaluators and programme designers, who may operate at some distance from the front line. While they need to be plugged into constituents' views, they also need ready access to the research literature and research community. Isaac Castillo, in his work on the Latin American Youth Centre in the US,[1] suggests that no service should ever be designed without drawing on the existing literature to show how it would be expected to work, and without using that evidence to inform its structure.

Fast feedback loops, however, need to run as close to the front line as possible. What is needed here is performance management: the real-time process of collecting data, conducting analysis, comparing results to expectations, forming insights and then making changes as a result. Performance management is far from a new concept, but it has only recently begun to emerge in the discourse around impact and evaluation.[2]

Implications for funders

From a funder’s perspective, two sets of related questions emerge from this approach. First, how do funders ensure that they support these elements of learning within the organizations and programmes they back? Second, how do they apply the same approach to learning to their own work?

Funders with a commitment to learning will want to ensure that they provide the resources, encouragement and flexibility that allow their grantees to learn during each of these three stages. This could include seeking evidence of learning in applicants' approaches to programme design and supporting knowledge-sharing once programmes have been delivered. But perhaps most importantly, funders that commit to a learning approach will allow grantees significant flexibility during the period of their support, allowing them to adapt to their real-time learning. Rigid funding frameworks that don't allow for change undermine learning just as much as a lack of financial resources does.

How funders embed learning in their own work depends on their approach. The Inspiring Impact paper Funders' principles and drivers of good impact practice, developed in 2013 by a group of grantmaking funders, suggests that funders can have three different purposes in impact measurement: understanding the difference they make; learning from grantees and from themselves; and making the best use of resources. All three purposes can be seen through the lens of learning, and we can expect funders to go through the same three stages of learning that we see among charities delivering programmes: learning before, during and after delivery of a programme.

Increasing the focus on learning

Learning is critical if we are to put into practice the insights we gain through impact measurement. It's essential for charities that want to do the best job possible, and for funders that want to make the best use of their resources. It's also a very active form of accountability to those we aim to serve: if we learn and improve, we move closer to doing the best we can on their behalf, and we can demonstrate that accountability by sharing how we have changed.

This brings me back to my original point: we need to ensure that learning is the explicit primary purpose of impact measurement.

For charities, this might mean being clearer about the capacities and approaches they need in place to ensure that both fast and slow feedback loops work well. Those that have seen evaluation and impact measurement as activities performed at the centre of the organization, facing external audiences, may need to add a new set of capacities much closer to the front line, building data collection, analysis and response into service delivery and management functions.

For funders, a focus on learning could mean seeking out these capacities in applicants and supporting them in grantees, as well as building their own learning systems. If that shifts the balance away from applicants who can demonstrate impact and towards those who can demonstrate learning, it will be extremely interesting to see how this plays out. If funders ask applicants to describe a change they've made to their work in response to what they've learned, rather than asking them to show evidence of impact, my hunch is that their selection processes will produce significantly different results. Furthermore, the organizational characteristics that reflect a readiness to learn are likely to differ from those that reflect demonstrable impact.

Ultimately, focusing on learning will ensure that what we do under the banner of impact measurement and evaluation is purposeful: it creates knowledge that can be put to use, knowledge that we have already thought about how we will use. If we want to avoid impact measurement becoming merely a subset of charities' marketing activity, I believe a focus on learning is crucial. If we're learning as charities, we know we can be better next year than we were this year. If we're learning as funders, we know we're getting better at putting our increasingly precious resources to the best use in a sector that desperately needs us to.

Tris Lumley is director of development at NPC. Email Tris.Lumley@thinkNPC.org

Twitter @trisml

Footnotes

- [1] NPC (2011), A journey to greater impact.

- [2] See Emma Tomkinson's recent Delivering the promise of social outcomes: the role of the performance analyst.

Comments (0)